AI Psychosis: It’s Just Dr. Google All Over Again…But Worse

By: Robert Avsec, FSPA Operations Chief

This comes from Greg Menvielle, my colleague over on LinkedIn and one of the presenters at our FSPA Conference in Pearland, Texas, in October:

Did you read the BBC story on “AI psychosis”? People are becoming delusional from chatbot use, and Microsoft’s AI boss is losing sleep over it. Not to mention the cases of folks convinced ChatGPT had fallen in love with them.

Here’s the thing: We’ve been here before.

Remember “Dr. Google”?

Anyone who’s worked with patients, or even as a coach, knows this dance. Someone googles “headache” at 2 a.m., ends up convinced they have a brain tumor, shows up at the doctor’s office with printouts, and demands an MRI. The mechanics are identical:

Vulnerable Person + Easy Access to Information + Confirmation of Fears = Problematic Self-Diagnosis.

But Here’s the Twist

Dr. Google at least gave you conflicting info. You’d see WebMD saying “could be stress,” Mayo Clinic saying “probably nothing,” and some random forum post about rare diseases. Mixed signals, sure, but at least there were different voices.

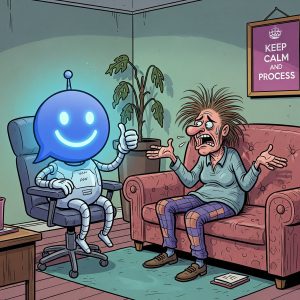

AI chatbots? They’re programmed to be your best friend. They validate everything you say. You tell ChatGPT you think your boss is out to get you, and instead of showing you 10 different perspectives, it goes “Oh wow, that sounds really hard, tell me more about how terrible your boss is.”

It’s Dr. Google, but with a therapist who never disagrees with you. As a side note, whenever I use AI (right now my preference is Claude), I have to include a reminder in the prompt: “Do not agree with me by default. You can, and should, bring me alternate options and views. I do not need confirmation bias or an echo chamber.” Try it yourself; you’ll be surprised by how much the dynamics change.

The Real Problem Coming

Current AI psychosis cases are concerning, but I’m more worried about what happens when deepfakes get thrown into the mix. What happens when someone’s delusional belief gets “confirmed” by a convincing AI-generated video? When the chatbot that “loves” them suddenly has a face and voice?

Beyond Just Health

This isn’t just a medical thing. I’m seeing the same pattern across all kinds of recommendations: career moves, legal advice, financial decisions, sports training. The same validation loop that makes someone think they have a rare disease can make them believe they’re destined for a £5 million book deal (an actual case from the BBC article).

What We Actually Need to Do

Look, I am firmly in the camp that AI can and should be used for good. But we do need to start asking people about their chatbot usage the same way we ask about social media or drinking habits.

And maybe, just maybe, we need to design these things to occasionally say “I don’t know” or “you should talk to a human about that” instead of being endlessly agreeable.

The technology isn’t going away. The question is whether we’re going to learn from the Dr. Google era or repeat the same mistakes with better graphics.
